Decoding Auditory Imagery with Multivoxel Pattern Analysis

Overview

Source: Laboratories of Jonas T. Kaplan and Sarah I. Gimbel—University of Southern California

Imagine the sound of a bell ringing. What is happening in the brain when we conjure up a sound like this in the "mind's ear"? There is growing evidence that the brain uses the same mechanisms for imagination that it uses for perception.1 For example, imagining visual images activates the visual cortex, and imagining sounds engages the auditory cortex. However, to what extent are these activations of sensory cortices specific to the content of our imagination?

One technique that can help to answer this question is multivoxel pattern analysis (MVPA), in which functional brain images are analyzed using machine-learning techniques.2-3 In an MVPA experiment, we train a machine-learning algorithm to distinguish among the patterns of activity evoked by different stimuli. For example, we might ask whether imagining the sound of a bell produces a different pattern of activity in auditory cortex than imagining the sound of a chainsaw or of a violin. If the classifier can learn to tell apart the activity patterns produced by these three stimuli, then we can conclude that auditory cortex is activated in a distinct way by each stimulus. In other words, instead of asking only whether a brain region is active, we ask about the information content of that region.

In this experiment, based on Meyer et al., 2010,4 we will cue participants to imagine several sounds by presenting them with silent videos that are likely to evoke auditory imagery. Since we are interested in measuring the subtle patterns evoked by imagination in auditory cortex, it is preferable if the stimuli are presented in complete silence, without interference from the loud noises made by the fMRI scanner. To achieve this, we will use a special kind of functional MRI sequence known as sparse temporal sampling. In this approach, a single fMRI volume is acquired 4-5 s after each stimulus, timed to capture the peak of the hemodynamic response.
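The timing logic behind sparse sampling can be checked with a simple model. The sketch below assumes an SPM-style double-gamma hemodynamic response function (the protocol does not specify a response model) and uses the trial timing given under Data collection: 2-s acquisitions separated by 9 s of silence, with each 5-s clip starting 4 s after an acquisition begins.

```python
import math

def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density at time t (in seconds)."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)

def double_gamma_hrf(t):
    """SPM-style canonical HRF (assumed): a positive lobe minus a smaller, later undershoot."""
    return gamma_pdf(t, 6) - gamma_pdf(t, 16) / 6

# Locate the modeled peak on a 0.1-s grid from 0 to 30 s.
times = [i / 10 for i in range(301)]
peak_time = max(times, key=double_gamma_hrf)

# Trial timing from the protocol: a 5-s clip starts 4 s after an acquisition
# begins, and the next acquisition begins 11 s (2 s + 9 s) after that one.
clip_onset, clip_length, next_volume = 4.0, 5.0, 2.0 + 9.0
clip_midpoint = clip_onset + clip_length / 2
lag_after_midpoint = next_volume - clip_midpoint

print(f"modeled HRF peak: {peak_time:.1f} s after an event")
print(f"next volume starts {next_volume - clip_onset:.1f} s after clip onset, "
      f"{lag_after_midpoint:.1f} s after the clip midpoint")
```

Under this model, the response to a brief event peaks about 5 s after it, and the next volume arrives 4.5 s after the middle of the clip, within the 4-5 s window described above.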

Procedure

1. Participant recruitment

- Recruit 20 participants.

- Participants should be right-handed and have no history of neurological or psychological disorders.

- Participants should have normal or corrected-to-normal vision to ensure that they will be able to see the visual cues properly.

- Participants should not have metal in their body. This is an important safety requirement due to the high magnetic field involved in fMRI.

- Participants should not suffer from claustrophobia, since the fMRI requires lying in the small space of the scanner bore.

2. Pre-scan procedures

- Fill out pre-scan paperwork.

- When participants come in for their fMRI scan, instruct them to first fill out a metal screening form to make sure they have no contraindications for MRI, an incidental-findings form giving consent for their scan to be reviewed by a radiologist, and a consent form detailing the risks and benefits of the study.

- Prepare participants to go in the scanner by removing all metal from their body, including belts, wallets, phones, hair clips, coins, and all jewelry.

3. Provide instructions for the participant.

- Tell the participants that they will see a series of short videos inside the scanner. These videos will be silent, but they may evoke a sound in their "mind's ear." Ask the participant to focus on and encourage this auditory imagery, trying to "hear" each sound as vividly as they can.

- Stress to the participant the importance of keeping their head still throughout the scan.

4. Put the participant in the scanner.

- Give the participant earplugs to protect their ears from the noise of the scanner and headphones so they can hear the experimenter during the scan, and have them lie down on the bed with their head in the coil.

- Give the participant the emergency squeeze ball and instruct them to squeeze it in case of emergency during the scan.

- Use foam pads to secure the participant's head in the coil to limit movement during the scan, and remind the participant that it is very important to stay as still as possible during the scan, as even the smallest movements blur the images.

5. Data collection

- Collect a high-resolution anatomical scan.

- Begin functional scanning.

- Synchronize the start of stimulus presentation with the start of the scanner.

- To achieve sparse temporal sampling, set the acquisition time of each MRI volume to 2 s, with a 9-s silent delay between volume acquisitions (one volume every 11 s).

- Present the silent videos via a laptop connected to a projector. The participant views a screen at the back of the scanner bore through a mirror mounted above their eyes.

- Synchronize the start of each 5-s video clip to begin 4 s after the previous MRI acquisition starts. This will ensure that the next MRI volume is acquired 7 s after the start of the video clip, to capture the hemodynamic activity that corresponds to the middle of the movie.

- Present three different silent videos that evoke vivid auditory imagery: a bell swinging back and forth, a chainsaw cutting through a tree, and a person playing a violin.

- In each functional scan, present each video 10 times, in random order. With each trial lasting 11 s, this will result in a scan 330 s (5.5 min) long.

- Perform 4 functional scans.
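The timing arithmetic in these steps can be made explicit by generating a trial schedule. This is a minimal sketch: the function name `make_run`, the fixed random seed, and the tuple layout are illustrative choices, and in a real session the presentation software would also wait for the scanner's trigger at the start of each scan.

```python
import random

ACQ_TIME = 2.0      # s, time to acquire one volume
GAP = 9.0           # s, silent delay between acquisitions
CLIP_OFFSET = 4.0   # s, clip onset after the preceding acquisition begins
CLIP_LENGTH = 5.0   # s, duration of each silent video
STIMULI = ["bell", "chainsaw", "violin"]
REPS_PER_RUN = 10
N_RUNS = 4
TRIAL_LENGTH = ACQ_TIME + GAP  # one volume, and one video, per trial

def make_run(rng):
    """Return a list of (clip_onset_s, volume_onset_s, stimulus) for one scan."""
    order = STIMULI * REPS_PER_RUN
    rng.shuffle(order)  # random order; each video still appears REPS_PER_RUN times
    schedule = []
    for i, stimulus in enumerate(order):
        acq_start = i * TRIAL_LENGTH
        clip_onset = acq_start + CLIP_OFFSET
        volume_onset = acq_start + TRIAL_LENGTH  # the next volume samples this trial
        # the whole clip must fall inside the silent gap between acquisitions
        assert acq_start + ACQ_TIME <= clip_onset and clip_onset + CLIP_LENGTH <= volume_onset
        schedule.append((clip_onset, volume_onset, stimulus))
    return schedule

rng = random.Random(0)  # fixed seed so the schedule can be regenerated
runs = [make_run(rng) for _ in range(N_RUNS)]
scan_length = len(runs[0]) * TRIAL_LENGTH

print(f"trial length: {TRIAL_LENGTH:.0f} s; scan length: {scan_length:.0f} s")
print(f"clip-to-volume delay: {runs[0][0][1] - runs[0][0][0]:.0f} s")
```

Each run contains 30 trials of 11 s (330 s), and every clip starts 7 s before the volume that samples it, matching the figures above.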

6. Data analysis

- Define a Region of Interest (ROI).

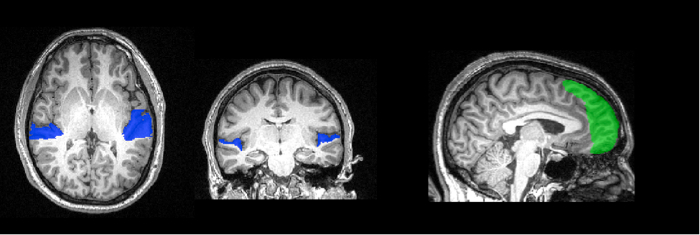

- Use the high-resolution anatomical scan of each participant to trace the voxels that correspond to the early auditory cortex (Figure 1). This region lies on the superior surface of the temporal lobe, in an area called the planum temporale. Use the anatomical features of each person's brain to create a mask specific to their auditory cortex.

Figure 1: Region of interest tracing. The surface of the planum temporale has been traced on this participant's high-resolution anatomical image, and is shown here in blue. In green is the control mask of the frontal pole. These voxels will be used for MVPA analysis.
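Applying the traced masks turns each functional volume into a vector of ROI voxel values, which is the input the classifier sees. The NumPy sketch below assumes the masks have already been resampled onto the functional images' voxel grid (a step not spelled out above); random arrays stand in for images that would normally be read from NIfTI files (for example with nibabel), and the mask coordinates are hypothetical.

```python
import numpy as np

def roi_patterns(bold, mask):
    """Turn a 4-D run (x, y, z, volumes) into a (volumes, voxels) pattern matrix.

    `mask` is a boolean 3-D array on the same voxel grid, True inside the ROI.
    """
    if bold.shape[:3] != mask.shape:
        raise ValueError("mask and functional data must share the same voxel grid")
    # Boolean indexing over the three spatial axes gives (n_voxels, n_volumes);
    # transposing makes each row the ROI pattern for one volume (one trial here).
    return bold[mask].T

# Toy data: an 8 x 8 x 8 grid with 30 volumes (one per trial in a sparse-sampling run).
rng = np.random.default_rng(0)
bold = rng.normal(size=(8, 8, 8, 30))

planum_temporale = np.zeros((8, 8, 8), dtype=bool)
planum_temporale[2:4, 3:6, 1:3] = True   # hypothetical hand-traced voxels
frontal_pole = np.zeros((8, 8, 8), dtype=bool)
frontal_pole[6:8, 0:2, 5:8] = True       # hypothetical control-mask voxels

X_pt = roi_patterns(bold, planum_temporale)
X_fp = roi_patterns(bold, frontal_pole)
print(X_pt.shape, X_fp.shape)
```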

- Pre-process the data.

- Perform motion correction to reduce motion artifacts.

- Perform temporal filtering to remove signal drifts.
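Motion correction is normally run with an image-registration tool (for example FSL's MCFLIRT or AFNI's 3dvolreg). Temporal filtering can take several forms; one simple version, assumed here, is to remove a low-order polynomial trend from each voxel's time series within a run. Because sparse sampling yields one volume per trial, each row below corresponds to one trial.

```python
import numpy as np

def detrend_run(X, order=1):
    """Remove slow polynomial drift from every voxel of a single run.

    X has shape (n_volumes, n_voxels). A polynomial in volume index (default:
    linear) is fit to each voxel by least squares, and the fit is subtracted.
    """
    t = np.linspace(-1.0, 1.0, X.shape[0])             # rescaled time for numerical stability
    design = np.vander(t, order + 1, increasing=True)  # columns: 1, t, t^2, ...
    beta, *_ = np.linalg.lstsq(design, X, rcond=None)
    return X - design @ beta

# Toy run: 30 volumes x 5 voxels of noise with a slow linear drift added.
rng = np.random.default_rng(1)
noise = rng.normal(size=(30, 5))
drift = np.linspace(0.0, 10.0, 30)[:, None]
X_detrended = detrend_run(noise + drift)
```

Apply the function to each run separately, so that drifts and baseline offsets that differ between scans are removed before the runs are combined for classification.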

- Train and test the classifier algorithm.

- Divide the data into training and testing sets. Training data will be used to train the classifier, and the left-out testing data will be used to assess what it has learned. To maximize the independence of training and testing data, leave out data from one functional scan as the testing set.

- Train a Support Vector Machine (SVM) algorithm on the labeled training data from auditory cortex in each subject. Then test the classifier's ability to correctly identify the sound on each trial of the testing set, whose labels are withheld from it, and record the classifier's accuracy.

- Repeat this procedure 4 times, leaving out a different scan as testing data each time. This type of procedure, in which each section of the data is left out once, is called cross-validation.

- Combine classifier accuracies across the 4 cross-validation folds by averaging.
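The leave-one-scan-out scheme can be sketched in NumPy as follows. To stay dependency-free, the SVM here is a hand-written one-vs-rest linear SVM trained by subgradient descent on the hinge loss; in practice a library implementation (for example scikit-learn's `LinearSVC` combined with `LeaveOneGroupOut`) would usually be used. The hyperparameters (`lam`, `lr`, `epochs`), the per-voxel z-scoring, and the simulated data are assumptions for illustration.

```python
import numpy as np

def train_linear_svm(X, y, n_classes, lam=0.01, lr=0.1, epochs=300):
    """One-vs-rest linear SVM: full-batch subgradient descent on the L2-regularized hinge loss."""
    n, d = X.shape
    W, b = np.zeros((n_classes, d)), np.zeros(n_classes)
    for k in range(n_classes):
        yk = np.where(y == k, 1.0, -1.0)          # class k versus the rest
        for _ in range(epochs):
            inside = yk * (X @ W[k] + b[k]) < 1   # samples violating the margin
            W[k] -= lr * (lam * W[k] - (yk[inside] @ X[inside]) / n)
            b[k] -= lr * (-yk[inside].sum() / n)
    return W, b

def leave_one_run_out(X, y, runs, n_classes):
    """Train on all runs but one, test on the held-out run; one accuracy per fold."""
    accuracies = []
    for held_out in np.unique(runs):
        train, test = runs != held_out, runs == held_out
        # z-score each voxel with training-set statistics only
        mu, sd = X[train].mean(axis=0), X[train].std(axis=0) + 1e-8
        W, b = train_linear_svm((X[train] - mu) / sd, y[train], n_classes)
        scores = ((X[test] - mu) / sd) @ W.T + b
        accuracies.append(np.mean(np.argmax(scores, axis=1) == y[test]))
    return np.array(accuracies)

# Simulated subject: 4 runs x 30 trials (10 per sound), 50 ROI voxels.
rng = np.random.default_rng(2)
n_runs, n_vox, n_classes = 4, 50, 3
prototypes = rng.normal(size=(n_classes, n_vox))   # hypothetical sound-specific patterns
y = np.concatenate([rng.permutation(np.repeat(np.arange(n_classes), 10)) for _ in range(n_runs)])
runs = np.repeat(np.arange(n_runs), 10 * n_classes)
X = prototypes[y] + rng.normal(size=(len(y), n_vox))

fold_acc = leave_one_run_out(X, y, runs, n_classes)
print("per-fold accuracy:", fold_acc, "mean:", fold_acc.mean())
```

Each fold holds out one whole scan, so trials from the same scan never appear in both the training and testing sets; averaging `fold_acc` gives the subject's accuracy, as in the final step above.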

- Statistical testing

- To determine whether the classifier performs better than chance (33%) at the group level, gather the accuracies for each subject and test whether their distribution differs from chance using a non-parametric Wilcoxon Signed-Rank test.

- We can also ask whether the classifier is performing better than chance for each individual. To determine the probability of a given accuracy level in chance data, create a null distribution by training and testing the MVPA algorithm on data whose labels have been randomly shuffled. Permute the labels 10,000 times to create a null distribution of accuracy values, and then compare the actual accuracy value to this distribution.

- To demonstrate the specificity of the information within the auditory cortex, we can train and test the classifier on voxels from a different location in the brain. Here, we will use a mask of the frontal pole, taken from a probabilistic atlas and warped to fit each subject's individual brain.
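The group-level Wilcoxon signed-rank test described above compares each subject's accuracy with chance (1/3 for three sounds). A library routine such as SciPy's `scipy.stats.wilcoxon` is the usual choice; the dependency-free sketch below computes the exact null distribution by counting signed-rank sums, and returns both a one-sided p-value (accuracy greater than chance) and a two-sided one. The accuracies are simulated, not the study's data.

```python
import random

def wilcoxon_signed_rank(values, mu):
    """Exact Wilcoxon signed-rank test of values against a reference value mu.

    Zero differences are dropped and tied |differences| receive average ranks.
    Returns (W_plus, one-sided p for "greater than mu", two-sided p).
    """
    diffs = [v - mu for v in values if v != mu]
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:  # give tied absolute differences their average rank
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)

    # Under H0 each rank is equally likely to carry a + or - sign, so the null
    # distribution of W+ is the distribution of subset sums of the ranks.
    # Doubling makes average ranks (x.0 or x.5) integers for exact counting.
    doubled = [round(2 * r) for r in ranks]
    counts = [1] + [0] * sum(doubled)
    for r in doubled:
        for s in range(len(counts) - 1, r - 1, -1):
            counts[s] += counts[s - r]
    target = round(2 * w_plus)
    p_greater = sum(counts[target:]) / 2 ** n
    p_less = sum(counts[: target + 1]) / 2 ** n
    return w_plus, p_greater, min(1.0, 2 * min(p_greater, p_less))

# Hypothetical accuracies for 20 subjects (simulated, not the study's data).
rng = random.Random(3)
accuracies = [rng.gauss(0.55, 0.08) for _ in range(20)]
w_plus, p_greater, p_two_sided = wilcoxon_signed_rank(accuracies, mu=1 / 3)
print(f"W+ = {w_plus}, one-sided p = {p_greater:.2g}, two-sided p = {p_two_sided:.2g}")
```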

Results

The average classifier accuracy in the planum temporale across all 20 participants was 59%. According to the Wilcoxon Signed-Rank test, this is significantly different from the chance level of 33%. The mean performance in the frontal pole mask was 32.5%, which is not greater than chance (Figure 2).

Figure 2: Classification performance in each participant. For three-way classification, chance performance is 33%. According to a permutation test, an alpha level of p < 0.05 corresponds to an accuracy of 42%.

The permutation test found that only 5% of the permutations achieved accuracy greater than 42%; thus, our statistical threshold for individual subjects is 42%. Nineteen of the 20 subjects had classifier performance significantly greater than chance using voxels from the planum temporale, while none had performance greater than chance using voxels from the frontal pole.
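The 42% threshold is the accuracy that only 5% of label permutations exceed, that is, the 95th percentile of the null distribution. The sketch below builds such a null for one simulated subject. Refitting an SVM 10,000 times per subject is slow, so this example swaps in a simpler correlation-based nearest-centroid classifier; shuffling labels within each scan (so that every scan keeps 10 trials per sound) and the +1 correction in the p-value are also assumptions rather than details given in the protocol.

```python
import numpy as np

def centroid_accuracy(X, y, runs):
    """Leave-one-run-out accuracy of a correlation-based nearest-centroid classifier."""
    accs = []
    for held_out in np.unique(runs):
        train, test = runs != held_out, runs == held_out
        classes = np.unique(y[train])
        centroids = np.array([X[train & (y == c)].mean(axis=0) for c in classes])
        # Pearson correlation of each test pattern with each class centroid
        Xt = X[test] - X[test].mean(axis=1, keepdims=True)
        C = centroids - centroids.mean(axis=1, keepdims=True)
        corr = (Xt @ C.T) / np.outer(np.linalg.norm(Xt, axis=1), np.linalg.norm(C, axis=1))
        accs.append(np.mean(classes[np.argmax(corr, axis=1)] == y[test]))
    return float(np.mean(accs))

def permutation_null(X, y, runs, n_perm=10_000, seed=0):
    """Accuracies obtained after shuffling the labels within each run."""
    rng = np.random.default_rng(seed)
    null = np.empty(n_perm)
    for i in range(n_perm):
        y_perm = y.copy()
        for r in np.unique(runs):
            idx = np.flatnonzero(runs == r)
            y_perm[idx] = rng.permutation(y[idx])
        null[i] = centroid_accuracy(X, y_perm, runs)
    return null

# Simulated subject with a weak sound-specific signal (hypothetical data).
rng = np.random.default_rng(5)
y = np.concatenate([rng.permutation(np.repeat(np.arange(3), 10)) for _ in range(4)])
runs = np.repeat(np.arange(4), 30)
X = 0.5 * rng.normal(size=(3, 50))[y] + rng.normal(size=(120, 50))

observed = centroid_accuracy(X, y, runs)
null = permutation_null(X, y, runs, n_perm=1000)  # 10,000 in the protocol; fewer here for speed
threshold = np.quantile(null, 0.95)                # accuracy exceeded by only 5% of permutations
p_value = (np.sum(null >= observed) + 1) / (len(null) + 1)
print(f"observed {observed:.2f}, 95th-percentile threshold {threshold:.2f}, p = {p_value:.3f}")
```

With simulated data the printed values will not match the 42% reported here; the threshold depends on the number of trials, the classifier, and the cross-validation scheme.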

Thus, we are able to predict from patterns of activity in auditory cortex which of the three sounds the participant was imagining. We were not able to make this prediction from activity patterns in the frontal pole, suggesting that this information is not present everywhere in the brain.

Application and Summary

MVPA is a useful tool for understanding how the brain represents information. Instead of considering the time course of each voxel separately, as in a traditional activation analysis, this technique considers patterns across many voxels at once, which can make it more sensitive than univariate techniques. A multivariate analysis can often uncover differences that a univariate analysis misses. In this case, we learned something about the mechanisms of mental imagery by probing the information content of a specific area of the brain, the auditory cortex. The content-specific nature of these activation patterns would be difficult to test with univariate approaches.
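A toy simulation makes the contrast between univariate and multivariate analysis concrete. In the hypothetical ROI below, half the voxels are more active for sound A and half for sound B, so averaging over the ROI erases the difference, while a simple nearest-centroid classifier (a stand-in for the SVM used in this protocol) can still tell the sounds apart from the full pattern.

```python
import numpy as np

rng = np.random.default_rng(4)
n_vox, n_trials = 40, 60

# Hypothetical ROI: half the voxels are more active for sound A, half for sound B,
# so the average response across the whole ROI is the same for both sounds.
pattern_a = np.r_[np.ones(n_vox // 2), -np.ones(n_vox // 2)]
pattern_b = -pattern_a
labels = np.repeat([0, 1], n_trials // 2)
X = np.where(labels[:, None] == 0, pattern_a, pattern_b) + rng.normal(scale=2.0, size=(n_trials, n_vox))

# Univariate view: one number per trial, the mean over all ROI voxels.
roi_mean = X.mean(axis=1)
mean_diff = roi_mean[labels == 0].mean() - roi_mean[labels == 1].mean()

# Multivariate view: nearest-centroid classification of the full patterns,
# trained on even-numbered trials and tested on odd-numbered ones.
train = np.arange(n_trials) % 2 == 0
centroids = np.array([X[train & (labels == c)].mean(axis=0) for c in (0, 1)])
dists = ((X[~train, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
accuracy = np.mean(np.argmin(dists, axis=1) == labels[~train])

print(f"A - B difference in ROI-mean response: {mean_diff:.3f}")
print(f"pattern classification accuracy: {accuracy:.2f}")
```

The ROI-mean difference comes out close to zero, while the pattern classifier is close to perfect.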

There are additional benefits that come from the direction of inference in this kind of analysis. In MVPA, we start with patterns of brain activity and attempt to infer something about the mental state of the participant. This kind of "brain-reading" approach can lead to the development of brain-computer interfaces and may open new opportunities for communication with people who have impaired speech or movement.

Copyright © 2025 MyJoVE Corporation. All rights reserved