Method Article

A Real-Time Interactive System for Studying Confrontational Pursuit Behavior in Rodents


Summary

Here, we present a protocol to detect and quantify predatory pursuit behavior in a mouse model. The platform offers a new research paradigm for studying the dynamics and neural mechanisms of predatory pursuit in mice, together with a standardized setup for studying pursuit behavior.

Abstract

Predatory pursuit behavior involves a series of important physiological processes, such as locomotion, learning, and decision-making, that are critical to an animal's success in capturing prey. However, few methods and systems exist for studying predatory pursuit behavior in the laboratory, especially in mice, a commonly used mammalian model. The main factors limiting this research are the uncontrollability of live prey (e.g., crickets) and the difficulty of harmonizing experimental standards. The goal of this study was to develop an interactive platform to detect and quantify the predatory pursuit of a robotic bait by mice. The platform uses computer vision to monitor the relative positions of the mouse and the robotic bait in real time and uses them to program the motion of the bait, which is driven magnetically by an interactive two-dimensional slider, forming a closed-loop system. The robotic bait evades approaching hungry mice in real time, and its escape speed and direction can be adjusted to mimic predatory pursuit in different contexts. After a short period of unsupervised training (less than two weeks), the mice performed the predation task with relatively high efficiency (capture in less than 15 s). By recording kinematic parameters such as the speeds and trajectories of the robotic bait and the mice, we quantified the pursuit process under different conditions. In conclusion, this method provides a new paradigm for the study of predatory behavior and can be used to further investigate the dynamics and neural mechanisms of predatory pursuit.

Introduction

The pursuit of prey by predators is not only a vivid demonstration of the struggle for survival but also a key driver of species evolution, maintaining ecological balance and energy flow in nature1,2. For predators, pursuing prey is a sophisticated endeavor that involves a variety of physiological processes. These include the motivational states that drive the predator to hunt3, the perceptual abilities that allow it to detect and track prey4,5,6, the decision-making abilities that dictate the course of the hunt7, the locomotor functions that enable physical pursuit8,9, and the learning mechanisms that refine hunting strategies over time10,11. Predatory pursuit has therefore received much attention in recent years as an important and complex behavioral model.

As a widely used mammalian model in the laboratory, mice have been documented to hunt crickets both in their natural habitat and in laboratory studies12. However, the diversity and uncontrollability of live prey limit the reproducibility of experiments quantifying predatory pursuit, as well as comparisons between laboratories13. First, cricket strains may differ among laboratories, resulting in differences in prey characteristics that could influence pursuit behavior. Second, individual crickets have unique characteristics that may affect the outcome of predatory interactions14. For example, escape speed may differ between crickets, leading to variability in pursuit dynamics. Additionally, some crickets may have a short warning distance, so that the predator has no opportunity to engage in pursuit. Finally, some crickets may exhibit defensive, aggressive behavior when stressed, which complicates the interpretation of experimental data15: it is difficult to determine whether changes in predator behavior are due to the defensive strategies of the prey or are inherent to the predator's behavioral patterns. This blurred line between prey defense and predator strategy adds another layer of complexity to the study of predatory pursuit.

Recognizing these limitations, researchers have turned to artificial prey as a means of controlling and standardizing experimental conditions16,17. Seven rodent species, including mice, have been shown to exhibit significant predatory behavior toward artificial prey13, suggesting that a controllable robotic bait is a feasible tool for studying predatory pursuit. By designing different artificial prey, researchers can exert a level of control over experimental conditions that is not possible with live prey18,19. In addition, a small number of previous studies have used artificially controlled robotic fish or prey to study schooling behavior and predation in fish15,17,19. These studies have highlighted the value of robotic systems in providing consistent, repeatable, and manipulable stimuli for experimental research. Despite these advances, however, the field of rodent behavior, particularly in mice, lacks a dedicated platform for detecting and quantifying predatory pursuit behavior with a robotic bait.

Based on these considerations, we designed an open-source, real-time interactive platform to study predatory pursuit behavior in mice. The robotic bait escapes from the mouse in real time and is highly controllable, so different escape directions and speeds can be set to simulate different predation scenarios. A Python program on the computer generates the motion parameters of the robotic bait, and an STM32 microcontroller uses these parameters to drive the servo motors that move the bait. The modular hardware can be adapted to the specific laboratory environment, and the software allows the task difficulty and the recorded metrics to be adjusted to the experimental needs. The lightweight design substantially reduces computer processing time, which is essential for real-time operation and improves portability. The platform offers the following technical features: flexible, controllable artificial prey for easy repetition and modeling; close simulation of the hunting process in a natural environment; real-time interaction with low system latency; scalable hardware and software; and cost-effectiveness and ease of use. Using this platform, we trained mice to perform predatory tasks under various conditions and quantified parameters such as trajectory, speed, and relative distance during predatory pursuit. The platform provides a rapid method for establishing a predatory pursuit paradigm to further investigate the neural mechanisms behind predatory pursuit.

Protocol

Adult C57BL/6J mice (male, 6-8 weeks old) are provided by the Army Medical University Laboratory Animal Center. All experimental procedures are performed in accordance with institutional animal welfare guidelines and are approved by the Animal Care and Use Committee of the Army Medical University (No. AMUWEC20210251). Mice are housed under temperature-controlled conditions (22-25°C) with a 12-h reverse light/dark cycle (lights on 20:00-8:00) and free access to food and water.

1. Hardware preparation for the real-time interactive platform

- Mount a webcam on a crossbar above the entire platform to monitor the positions of the mouse and robotic bait in the arena below in real-time and transmit the images to the computer (Figure 1A).

- Design a large circular arena (800 mm diameter, 300 mm height) consisting of a square acrylic panel at the bottom and an acrylic tube as the border. Place four evenly spaced icons (square, triangle, circle, and cross) on the interior wall of the arena to serve as visual cues (Figure 1B).

NOTE: Cover the inside wall of the acrylic tube and the surface of the square acrylic plate with an opaque sticker.

- Use a round neodymium magnet (40 mm diameter, 10 mm height; for comparison, crickets are about 2-4 cm long4,22) carrying food pellets (5 × 20 mg; see Materials) as the robotic bait. Attach a blue sticker to the surface of the magnet for identification and localization.

- Mount a two-dimensional slider (with an effective travel of 1000 mm) under the arena. Install another neodymium magnet on its carrier as a pulling magnet to magnetically and remotely pull the robotic bait.

- Drive the pulling magnet with servo motors controlled by an STM32 board through a switching circuit. The servo motors run in speed-direction mode (16,000 pulses per 75 mm of travel): the frequency of the PWM wave at the STM32 output port encodes the speed, and the output level (high, 3.3 V; low, 0 V) encodes the forward or reverse direction (Figure 1C).

NOTE: The switching circuit is shown in Supplementary Figure 1, which shows only the speed and direction signal connections for one slide; the other slide is controlled in the same way. The ground wires of the two slide control circuits can be connected together.
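The speed-direction encoding above reduces to a fixed pulses-per-millimeter ratio. The Python sketch below is illustrative and not the authors' code: it assumes the stated ratio of 16,000 pulses per 75 mm, that a high level (3.3 V) means forward, and that each slider axis is commanded independently. The function names are hypothetical.

```python
import math

PULSES = 16_000   # pulses per TRAVEL_MM of carrier travel (ratio given in step 1)
TRAVEL_MM = 75.0

def pulse_frequency_hz(speed_mm_s: float) -> float:
    """PWM frequency (pulses per second) that moves one slider axis at |speed_mm_s|."""
    return abs(speed_mm_s) * PULSES / TRAVEL_MM

def direction_level(speed_mm_s: float) -> int:
    """Direction pin level: 1 = high (3.3 V, assumed forward), 0 = low (0 V, reverse)."""
    return 1 if speed_mm_s >= 0 else 0

def axis_commands(speed_mm_s: float, heading_rad: float):
    """Split a bait velocity into (frequency_hz, level) pairs for the x and y sliders.

    Each axis runs at its projected speed, so the bait's resultant speed is speed_mm_s.
    """
    vx = speed_mm_s * math.cos(heading_rad)
    vy = speed_mm_s * math.sin(heading_rad)
    return [(pulse_frequency_hz(v), direction_level(v)) for v in (vx, vy)]
```

With this ratio, about 213.3 pulses correspond to 1 mm of travel, so the 30 cm/s escape speed used in the Results needs a 64,000 Hz pulse train and the 60 cm/s maximum needs 128,000 Hz.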

2. Software design for the real-time interactive platform

- Use the main program to detect the relative position of the mouse and the robotic bait and to transmit the motion signal of the robotic bait to the STM32 microcontroller (Figure 1D).

- When the computer receives images from the webcam, process the frames in the Python environment.

- Convert each frame from the RGB color model to the HSV color model using the OpenCV20 module (cv2.cvtColor).

NOTE: Because mice are nocturnal foragers, and to keep identification stable, conduct the experiments under low, stable illumination, with consistent lighting across the arena and no partial shadows. The illumination in this experiment was approximately 95 lux.

- Acquire mask images of the mouse and the robotic bait based on their respective color ranges in the HSV color model (cv2.inRange).

- Apply median filtering to the mask image to make the contours of the mouse and robotic bait clearer and more stable (cv2.medianBlur).

- Obtain the contours of the mouse and the robotic bait using the contour detection function (cv2.findContours), and then find the smallest rectangle that covers each contour. Use the center coordinates of this rectangle as the position of the mouse and the robotic bait, respectively, and save them as TXT files.

- Based on the positions of the mouse and the robotic bait in each frame, calculate the speed of the mouse and its relative distance to the robotic bait, and save these parameters as TXT files.

NOTE: Because of the computational cost of image processing, the script runs at about 41 fps, so the system detects mouse and robotic bait movements within about 24 ms. The measured delay between sending a motion command and detecting the resulting motion was 59.4 ± 7.3 ms (n = 8), so the total delay between a mouse movement and the bait movement it triggers is about 83 ms (for comparison, crickets have a reaction time of less than 250 ms22).

- Based on the relative distance between the mouse and the robotic bait and their respective positions in the arena, determine the escape strategy of the robotic bait21,22,23.

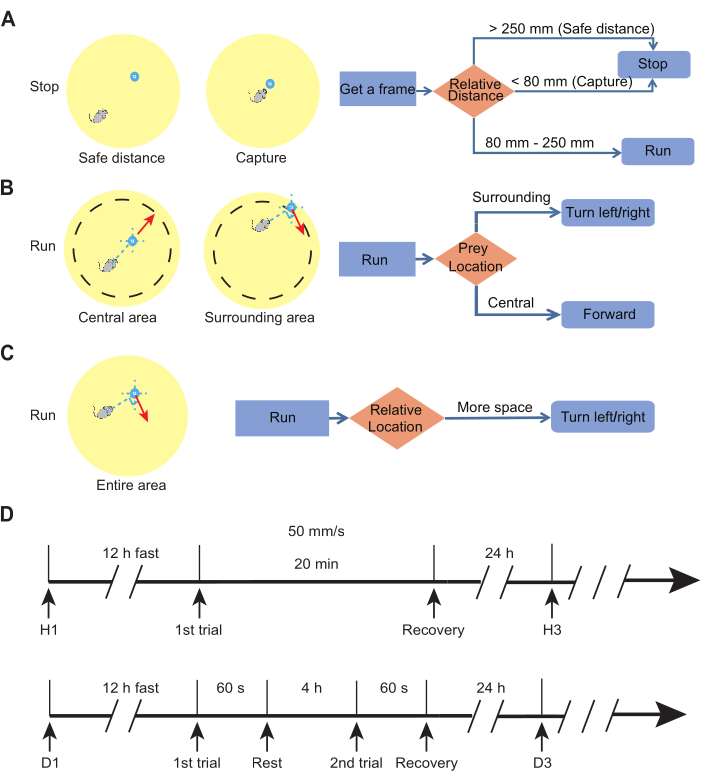

NOTE: If the mouse is relatively far from the robotic bait (>250 mm), the bait is considered to be in a safe zone and remains stationary. If the mouse is close enough to the robotic bait (<80 mm), the trial is considered a successful capture, and the robotic bait stops, allowing the mouse to consume the food pellets on it (Figure 2A). Because the relative distance in this method is measured between the centers of the mouse and the robotic bait, this threshold was set based on the body length of the mouse (approximately 11 cm) and the diameter of the bait (4 cm). In the straight-line escape strategy, if the bait is in the central area of the arena (distance to boundary >75 mm), where there is sufficient space in all escape directions, it moves in the direction in which the mouse is approaching it. Conversely, if the bait is in the surrounding area near the arena wall (distance to boundary ≤75 mm), where there is insufficient space for continued movement, it makes a 90° turn toward the side with the greater distance to the arena boundary (more available space; Figure 2B). In the turn-based escape strategy, the robotic bait turns 90° toward the side with more space to escape, with the decision re-evaluated at 0.5 s intervals (Figure 2C).

- After determining the escape strategy, have the Python script encode the speed and direction signals separately and send them to the STM32 via the serial port (ser.port = 'COM3') at a baud rate of 115200.
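The escape rules in the NOTE above can be expressed as a pure function that returns a unit escape direction. This is a hedged sketch under several assumptions not stated in the protocol: coordinates are in millimeters with the arena center at the origin, "the direction in which the mouse is approaching" is taken as the mouse-to-bait vector, "more space" is measured as the free path to the circular wall along each perpendicular, and the 0.5 s re-evaluation of the turn-based strategy is left to the calling loop. All names are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float]

ARENA_RADIUS_MM = 400.0   # 800 mm diameter arena, center at (0, 0)
SAFE_MM = 250.0           # farther than this: bait stays still (safe zone)
CAPTURE_MM = 80.0         # closer than this: capture, bait stops
WALL_MARGIN_MM = 75.0     # within this of the wall: bait turns 90 degrees

def _unit(v: Vec) -> Vec:
    n = math.hypot(v[0], v[1])
    return (v[0] / n, v[1] / n) if n else (0.0, 0.0)

def free_path(p: Vec, u: Vec, radius: float = ARENA_RADIUS_MM) -> float:
    """Distance from p (inside the arena) along unit vector u to the circular wall."""
    b = p[0] * u[0] + p[1] * u[1]
    c = p[0] ** 2 + p[1] ** 2 - radius ** 2  # negative while p is inside the arena
    return -b + math.sqrt(b * b - c)

def turn_90(p: Vec, heading: Vec) -> Vec:
    """Perpendicular to heading that leaves more room before the wall."""
    left = (-heading[1], heading[0])
    right = (heading[1], -heading[0])
    return left if free_path(p, left) >= free_path(p, right) else right

def escape_direction(bait: Vec, mouse: Vec, strategy: str = "straight") -> Optional[Vec]:
    """Unit escape direction for the bait, or None when it should not move."""
    d = math.dist(bait, mouse)
    if d < CAPTURE_MM or d > SAFE_MM:
        return None  # captured (let the mouse feed) or safe zone (stay still)
    away = _unit((bait[0] - mouse[0], bait[1] - mouse[1]))  # mouse's approach direction
    if strategy == "turn":
        return turn_90(bait, away)  # caller re-evaluates every 0.5 s
    dist_to_wall = ARENA_RADIUS_MM - math.hypot(bait[0], bait[1])
    if dist_to_wall > WALL_MARGIN_MM:
        return away  # central area: keep fleeing along the approach direction
    return turn_90(bait, away)  # near the wall: turn toward the side with more space
```

The returned unit vector, multiplied by the configured escape speed, gives the velocity that would then be split into per-axis speed and direction signals for the STM32.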

NOTE: The STM32 firmware is written and compiled in C. When a speed signal is received, the firmware converts it into a PWM wave of the corresponding frequency by dividing the 72 MHz (72,000,000 Hz) clock and outputs it. When a direction signal is received, the firmware sets the output port high (3.3 V) or low (0 V).

- Use a serial port programmer to flash the compiled C program to the STM32.
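The clock-division step in the NOTE above can be checked offline before writing the C firmware. This sketch assumes a general-purpose STM32F103 timer clocked at 72 MHz with 16-bit prescaler (PSC) and auto-reload (ARR) registers, so that f_PWM = 72 MHz / ((PSC + 1)(ARR + 1)). The search strategy and function name are illustrative, not the authors' firmware.

```python
import math

TIMER_CLOCK_HZ = 72_000_000  # assumed timer clock, equal to the 72 MHz system clock
MAX_COUNT = 65_536           # PSC and ARR are 16-bit registers

def timer_settings(freq_hz, clock_hz=TIMER_CLOCK_HZ):
    """Return (psc, arr, actual_hz) with clock / ((psc + 1) * (arr + 1)) close to freq_hz.

    For a 50% duty cycle, the compare register would be set to (arr + 1) // 2.
    """
    if freq_hz <= 0:
        raise ValueError("frequency must be positive; stop the timer for zero speed")
    ticks = clock_hz / freq_hz                       # timer ticks per PWM period
    psc = max(0, math.ceil(ticks / MAX_COUNT) - 1)    # smallest prescaler that lets ARR fit
    if psc >= MAX_COUNT:
        raise ValueError("frequency too low for a 16-bit prescaler")
    arr = min(max(round(ticks / (psc + 1)) - 1, 1), MAX_COUNT - 1)
    actual_hz = clock_hz / ((psc + 1) * (arr + 1))
    return psc, arr, actual_hz
```

Keeping PSC as small as possible maximizes ARR resolution: 64,000 Hz (30 cm/s) is reproduced exactly with PSC = 0 and ARR = 1124, while very slow speeds such as 1 mm/s need a nonzero prescaler to keep ARR within 16 bits.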

- Use the reset program to move the robotic bait to the center of the arena.

NOTE: The reset program tracks the position of the robotic bait and continuously drives it toward the center of the arena (5 cm/s); once the bait is detected <20 mm from the center, it is considered to have reached the center and stops moving. Run the reset program before running the main program.
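The reset behavior described in the NOTE above amounts to a constant-speed homing loop. This sketch assumes arena-centered coordinates in millimeters and one velocity command per processed frame; the function names and the idealized simulation (no latency or slip) are hypothetical.

```python
import math

RESET_SPEED_MM_S = 50.0   # 5 cm/s
ARRIVAL_RADIUS_MM = 20.0  # bait counts as centered within 20 mm

def reset_velocity(bait_xy, center_xy=(0.0, 0.0)):
    """Velocity (vx, vy) in mm/s toward the center, or (0, 0) once the bait has arrived."""
    dx = center_xy[0] - bait_xy[0]
    dy = center_xy[1] - bait_xy[1]
    dist = math.hypot(dx, dy)
    if dist < ARRIVAL_RADIUS_MM:
        return (0.0, 0.0)
    return (RESET_SPEED_MM_S * dx / dist, RESET_SPEED_MM_S * dy / dist)

def simulate_reset(start_xy, dt_s=1 / 41, max_steps=10_000):
    """Idealized simulation at about 41 fps: step until the bait stops near the center."""
    x, y = start_xy
    for step in range(max_steps):
        vx, vy = reset_velocity((x, y))
        if vx == 0.0 and vy == 0.0:
            return (x, y), step * dt_s
        x, y = x + vx * dt_s, y + vy * dt_s
    raise RuntimeError("bait did not reach the center")
```

From 300 mm off-center, the idealized loop stops after roughly 5.6 s; real hardware adds the command-to-motion delay noted earlier.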

3. Habituation (Figure 2D)

- Fast the mouse for 12 h to reduce its body weight to 90-95% of normal, with free access to water during this period.

- First, run the reset program to move the robotic bait to the center of the arena, and then isolate the hungry mouse at the edge of the arena with a baffle.

- Set the save path of the file and the moving speed of the robotic bait (5 cm/s) in the main program. Then click Run, and observe in the pop-up video window whether the mouse and robotic bait are detected stably.

- Remove the baffle, start the timer, and observe the predatory behavior of the mouse for 20 min, recording the time when the mouse first takes a food pellet from the robotic bait. If the mouse fails to do so, stop the bait and place it next to the mouse for feeding.

- Return the mouse to its cage and allow a 24-h recovery period with free access to food and water. Then, repeat habituation. Consider habituation complete when the time to first food retrieval does not differ significantly between two consecutive trials within the same group of animals. Habituation typically takes 3-5 trials.

- After each habituation session, thoroughly clean the arena with 75% alcohol and water.

4. Predation task (Figure 2D)

- Begin the predation task immediately after habituation is complete.

- Fast the mouse for 12 h to reduce its body weight to 90-95% of normal, with free access to water during this period.

- First, run the reset program to move the robotic bait to the center of the arena, and then isolate the hungry mouse at the edge of the arena with a baffle.

- Set the save path of the file and the moving speed of the robotic bait (0-60 cm/s, different speeds can be set according to experimental needs) in the main program. Then click Run, and observe in the pop-up video window whether the mouse and robotic bait are detected stably.

- Remove the baffle and start the timer to observe the predatory behavior of the mouse for 60 s.

- If the mouse captures the robotic bait within 60 s, close the main program and allow the mouse to eat all the food pellets before returning it to the cage.

- If the mouse does not capture the robotic bait within 60 s, release the mouse directly back into the cage without any reward or punishment.

- Conduct a second round of predation tasks 4 h later, repeating steps 4.3-4.5.

- Allow the mouse a 24-h recovery period with free access to food and water. Then, repeat the predation task. If more than 80% of the mice capture the robotic bait in less than 15 s in two consecutive trials, consider the mice skilled predators.

- After each predation task, thoroughly clean the arena with 75% alcohol and water.
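The trial and training criteria of this section (60 s trial limit, skilled predators defined by captures under 15 s in two consecutive trials for more than 80% of mice) can be applied consistently with a small helper. The sketch assumes that "two consecutive trials" means each mouse's two most recent trials and that a failed trial is recorded as None; the names are hypothetical.

```python
from typing import Dict, List, Optional

TRIAL_LIMIT_S = 60.0
SKILLED_TIME_S = 15.0
SKILLED_FRACTION = 0.8

def trial_outcome(capture_time_s: Optional[float]) -> Optional[float]:
    """Capture latency if the bait was caught within 60 s, else None (no reward)."""
    if capture_time_s is None or capture_time_s > TRIAL_LIMIT_S:
        return None
    return capture_time_s

def mouse_is_skilled(latencies: List[Optional[float]]) -> bool:
    """True if the two most recent trials were both captures in under 15 s."""
    if len(latencies) < 2:
        return False
    return all(t is not None and t < SKILLED_TIME_S for t in latencies[-2:])

def cohort_is_skilled(latencies_by_mouse: Dict[str, List[Optional[float]]]) -> bool:
    """More than 80% of mice must meet the per-mouse criterion."""
    n = len(latencies_by_mouse)
    skilled = sum(mouse_is_skilled(v) for v in latencies_by_mouse.values())
    return n > 0 and skilled / n > SKILLED_FRACTION
```

Note the strict inequality: a cohort in which exactly 80% of mice qualify (e.g., 4 of 5) does not yet meet the criterion.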

Results

To escape from a predator, prey often employ flexible and variable escape strategies, such as changing escape speed or fleeing in unpredictable directions21,22,23. In this study, the speed and direction of the robotic bait are controlled independently, so either can be changed to simulate the predation task under different conditions.

First, we tested the reliability of the platform's controls. The escape speed is adjustable in the range of 0-60 cm/s, which matches the estimated range of cricket escape speeds (0-60 cm/s22). The actual speed was monitored by the webcam, and the difference between the set speed and the actual speed was less than 2% (Table 1). We then designed several predation tasks with different escape strategies for the robotic bait:

(i) In the predation task with different escape speeds, the robotic bait used a straight-line escape strategy (Supplementary Video 1) and escaped at 15 cm/s for one group of mice and at 30 cm/s for the other.

(ii) In the predation task with different escape directions, the speed of the robotic bait was fixed (30 cm/s), and the robotic bait used a straight-line escape strategy for one group and a turn-based escape strategy for the other (Supplementary Video 2).

Predation tasks at different speed difficulties

After 4 habituation trials, the time to first capture of the robotic bait was significantly reduced in 16 mice (Figure 3A), suggesting that the mice had adapted to the arena environment and treated the robotic bait as prey to be captured. The 16 mice were then divided into two groups (8 mice each), and each group performed the predation task with a different escape speed of the robotic bait. When the escape speed was 15 cm/s, all mice (8/8) successfully captured the robotic bait, whereas at 30 cm/s only 62.5% (5/8) did (Figure 3B). These results indicate that the difficulty of the predation task can be adjusted effectively by varying the escape speed of the robotic bait. Predation time was recorded for all mice, but only mice that ultimately captured the robotic bait were included in the statistics. At both difficulties, these mice completed the predation task with a consistent time cost (<15 s) after 10 predation trials (Figure 3C, 15 cm/s, n = 8; Figure 3D, 30 cm/s, n = 5). The search and pursuit phases of the predation process could be clearly distinguished (Figure 3E,F): the pursuit phase begins when the mouse starts moving toward the robotic bait and ends when the mouse catches up with it (<8 cm), and the search phase lasts from when the mouse enters the arena until the pursuit begins. The attack and consumption phases were not analyzed because the mice could feed on the food pellets immediately after catching up with the robotic bait. The speeds of the mice and their distances to the robotic bait during the pursuit phase at both speed difficulties indicate that the platform reliably induces pursuit of the robotic bait (Figure 3G,H).
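The search/pursuit split described above can be made reproducible with an explicit rule applied to the per-frame distance trace. The rule in this sketch is an assumption for illustration, not necessarily the authors' criterion: capture is the first frame with a mouse-bait distance below 80 mm, and pursuit onset is the start of the uninterrupted run of decreasing distance that ends at capture.

```python
from typing import List, Optional, Tuple

CAPTURE_MM = 80.0  # catch-up threshold (<8 cm)

def split_phases(distance_mm: List[float]) -> Optional[Tuple[int, int]]:
    """Return (pursuit_onset, capture) frame indices, or None if the bait was not caught.

    Frames [0, onset) form the search phase; frames [onset, capture] form the pursuit phase.
    """
    capture = next((i for i, d in enumerate(distance_mm) if d < CAPTURE_MM), None)
    if capture is None:
        return None
    onset = capture
    # Walk backward while the distance was still shrinking toward capture
    while onset > 0 and distance_mm[onset - 1] > distance_mm[onset]:
        onset -= 1
    return onset, capture
```

Frame indices convert to seconds by dividing by the processing rate (about 41 fps). Raw tracks jitter from frame to frame, so smoothing the distance trace (for example, with a short moving average) before applying this rule is advisable.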

Predation tasks with different escape strategies

Similarly, 16 habituated mice (Figure 4A) were divided into two groups (8 mice each) to perform the predation task with robotic bait using different escape strategies. The bait speed was 30 cm/s in both groups, and the two groups had the same predation success rate (75%, 6/8; Figure 4B), suggesting that the escape strategy of the robotic bait (Figure 4C) did not significantly alter the difficulty of the predation task, consistent with previous studies15. Predation time showed the same trend, reaching a constant time cost (<15 s) after 8 predation trials (Figure 4D, straight-line escape strategy; Figure 4E, turn-based escape strategy). The changes in both relative distance and mouse speed also showed that the platform reliably induced predatory pursuit in mice under both escape strategies (Figure 4F,G).

Details of the design, hardware configuration, software, and raw data are available from https://github.com/wjcyun/Real-time-interactive-platform.

Figure 1: Hardware and software design of the real-time interactive platform. (A) Panoramic view of the platform. It consists mainly of a webcam at the top, a circular arena in the middle, and a two-dimensional slider at the bottom; the table and brackets provide support and anchoring. (B) Top, image of the arena captured by the webcam. Bottom, enlarged 3D view of the robotic bait (40 mm diameter, 10 mm height). (C) Schematic of the real-time interactive platform. The webcam captures images of the arena and sends them to the computer, which generates the movement instructions for the robotic bait and sends them to the STM32. The STM32 converts these digital commands into pulse and direction signals for the servo motors, which move the two-dimensional slide rail; the slide rail magnetically drives the robotic bait to escape from the pursuing mouse, closing the control loop. (D) Workflow diagram of the main program. Upon receiving images from the webcam, the computer first converts them to the HSV color model. It then extracts the silhouettes of the mouse and the robotic bait based on their color thresholds and determines their positions in the arena. Finally, it determines the motion pattern of the robotic bait (stop or escape) based on the relative distance between the mouse and the robotic bait and on the position of the bait in the arena.

Figure 2: Movement strategies of the robotic bait and the experimental procedure. (A) Basic escape strategy of the robotic bait: the bait escapes only when its distance to the mouse is 8-25 cm and remains stationary when the distance is greater than 25 cm or less than 8 cm. (B) Straight-line escape strategy. When the robotic bait is in the central area (>7.5 cm from the boundary), it escapes in the direction of the mouse's pursuit. (C) Turn-based escape strategy. The robotic bait turns 90° toward the side with more space to escape. (D) Top: flow of habituation, which usually takes 3-5 trials. Bottom: flow of the predation task. Two predation tasks can be performed in a training cycle, separated by more than 4 h.

Figure 3: Results for the different speed difficulties. (A) Time to first capture of the robotic bait during habituation (n = 16, one-way ANOVA). (B) Success rates of the two groups of mice in the predation task with the robotic bait moving at 15 cm/s and 30 cm/s, respectively (percentage of animals in each group that successfully completed each predation task; n = 8 per group). (C,D) Predation times of mice in the predation task (C, n = 8, robotic bait at 15 cm/s; D, n = 5, robotic bait at 30 cm/s; one-way ANOVA). (E) Trajectories of the mouse and robotic bait during a representative predation task in which the robotic bait used a straight-line escape strategy. (F) Representative relative distance between the mouse and the robotic bait and speed of the mouse during a single predation task. (G,H) Top: changes in mouse speed during pursuit. Bottom: changes in the relative distance between the mouse and robotic bait during pursuit (G, n = 8, robotic bait at 15 cm/s; H, n = 5, robotic bait at 30 cm/s).

Figure 4: Results for the different escape strategies. (A) Time to first capture of the robotic bait during habituation (n = 16, one-way ANOVA). (B) Success rates of the two groups of mice in the predation task with different escape strategies (percentage of animals in each group that successfully completed each predation task; n = 8 per group). (C) Trajectories of the mouse and robotic bait during a representative predation task in which the robotic bait used a turn-based escape strategy. (D,E) Predation times of mice in the predation task (D, n = 6, straight-line escape strategy; E, n = 6, turn-based escape strategy; one-way ANOVA). (F,G) Top: changes in mouse speed during pursuit. Bottom: changes in the relative distance between the mouse and robotic bait during pursuit (F, n = 6, straight-line escape strategy; G, n = 6, turn-based escape strategy).

| Set speed | Average actual speed | Error |
| --- | --- | --- |
| 100 mm/s | 99.38 mm/s | 0.60% |
| 200 mm/s | 200.95 mm/s | 0.50% |
| 300 mm/s | 303.77 mm/s | 1.30% |
| 400 mm/s | 407.50 mm/s | 1.90% |
| 500 mm/s | 509.17 mm/s | 1.80% |
| 600 mm/s | 607.41 mm/s | 1.20% |

Table 1: Set speed and average actual speed of the robotic bait.
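The Error column of Table 1 is consistent with the relative error |actual - set| / set, rounded to one decimal place; this definition is inferred from the values rather than stated in the text. A quick check using the table's own numbers:

```python
# Set and average actual speeds from Table 1 (mm/s)
TABLE_1 = [(100, 99.38), (200, 200.95), (300, 303.77),
           (400, 407.50), (500, 509.17), (600, 607.41)]

def relative_error_pct(set_speed: float, actual_speed: float) -> float:
    """Unsigned relative deviation of the measured speed from the set speed, in percent."""
    return abs(actual_speed - set_speed) / set_speed * 100.0

errors = [relative_error_pct(s, a) for s, a in TABLE_1]
for (s, a), e in zip(TABLE_1, errors):
    print(f"{s} mm/s -> {a} mm/s: {e:.2f}%")
```

All six deviations fall below 2%, matching the claim in the Results.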

Supplementary Figure 1: Signal adaptation circuit. Ports PE6 and PE7 in the STM32 give direction and speed signals, respectively, which are passed through an amplifier circuit to ports 43 and 39 of the servo motor controller.

Supplementary Video 1: Representative predation task in which the robotic bait uses a straight-line escape strategy (30 cm/s).

Supplementary Video 2: Representative predation task in which the robotic bait uses a turn-based escape strategy (30 cm/s).

Discussion

In this protocol, to achieve real-time control with low system latency, we use OpenCV, a lightweight and efficient computer vision library, together with a color model to identify the positions of the mouse and the robotic bait. This requires relatively stable lighting in the arena, with shadows avoided as much as possible so that they do not interfere with detection of the black mice. To obtain relatively stable contour detection, we sample the color ranges of the mouse and the robotic bait at several locations in the arena before the start of the experiment. In addition, the color ranges of the mouse and the robotic bait can be changed to study other mouse strains, such as BALB/c (white), or the effect of food color on predatory behavior.
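Color samples taken at several arena locations can be turned into cv2.inRange thresholds by taking per-channel minima and maxima and widening them by a margin. This is a sketch under stated assumptions: the margin values and the helper name are hypothetical, the samples are (H, S, V) triplets on OpenCV's 8-bit scale (for example, read from cv2.cvtColor(frame, cv2.COLOR_BGR2HSV) at chosen pixels), and hue wrap-around for red objects is not handled.

```python
from typing import Iterable, Tuple

HSV = Tuple[int, int, int]
HSV_MAX = (179, 255, 255)  # OpenCV 8-bit HSV limits

def hsv_range_from_samples(samples: Iterable[HSV], margin: HSV = (5, 30, 30)) -> Tuple[HSV, HSV]:
    """Lower/upper bounds covering all samples plus a margin, clipped to valid HSV values.

    Does not handle hue wrap-around (e.g., red near H = 0 and H = 179).
    """
    samples = list(samples)
    if not samples:
        raise ValueError("need at least one HSV sample")
    lower = tuple(max(0, min(s[c] for s in samples) - margin[c]) for c in range(3))
    upper = tuple(min(HSV_MAX[c], max(s[c] for s in samples) + margin[c]) for c in range(3))
    return lower, upper
```

Sampling in both the brightest and dimmest parts of the arena is what makes the resulting range robust to the residual lighting gradients mentioned above.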

Another point worth mentioning is that, to achieve lower latency, the program only displays the result window and does not save video, so users must record the experiment with separate screen-recording software for offline analysis. We recommend the offline version of DeepLabCut for keypoint identification24. We tried to integrate the real-time version of DeepLabCut, but multi-target, multi-keypoint detection still introduced a large delay25. We would be glad to exchange experience with anyone who has a better solution for real-time multi-target, multi-keypoint detection.

We also note that the platform generates mechanical noise (~50 dB) during operation from three main sources: the operating noise of the servo motors, the noise from the movement of the slides, and the friction between the robotic bait and the bottom of the arena. Mice can become accustomed to the operating noise of the servo motors during the habituation phase, whereas the slide and friction noise depend on the moving speed. Because the robotic bait moves at 5 cm/s during habituation, changing the speed in the first predation trials inevitably changes the ambient noise as well; this effect diminished with repeated predation training. We also reduced frictional noise by coating the bottom of the robotic bait with wax.

The platform provides a paradigm for studying predatory pursuit behavior in rodents, particularly in mice. Training is fast and the success rate is high: driven by appetite and without human guidance, mice rapidly learn to exhibit robust predatory pursuit of the robotic bait. The protocol standardizes the prey used in studies of predatory pursuit and allows users to customize prey characteristics to study their effects on predatory pursuit behavior under different conditions. The scalable platform can be combined with fiber photometry, electrophysiology, and optogenetics to further investigate the neural mechanisms of predatory pursuit behavior.

Disclosures

The authors have nothing to disclose.

Acknowledgements

This study is supported by the National Natural Science Foundation of China to YZ (32171001, 32371050).

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| Acrylic cylinder | SENTAI | PMMA | Diameter 800 mm, height 300 mm, thickness 8 mm |
| Anti-vibration table | VEOO | Custom made | Length 1500 mm, width 1500 mm, height 750 mm |
| Camera | JIERUIWEITONG | HF868SS | Pixel size 3 µm × 3 µm; 480P: 120 fps |
| Camera support frame | RUITU | Custom made | Maximum width 3300 mm, maximum height 2600 mm |
| Circuit board | WXRKDZ | Custom made | Length 60 mm, width 40 mm, hole spacing 2.54 mm |
| Computer | DELL | Precision 5820 Tower | Intel(R) Xeon(R) W-2155 CPU; NVIDIA GeForce RTX 2080 Ti |
| DuPont Line | TELESKY | Custom made | 30 cm |
| Food pellets | Bio-Serv | F07595 | 20 mg |
| Platform support frame | HENGDONG | OB3030 | Length 1600 mm, height 900 mm, width 800 mm |
| Regulated power supply | ZHAOXIN | PS-3005D | Output voltage: 0-30 V; output current: 0-3 A |
| Round magnetic block | YPE | YPE-230213-5 | Diameter 40 mm, thickness 10 mm |
| Servo Motor Driver | FEREME | FCS860P | 0.1-5.5 kW; SVPWM; 220 VAC +10%/-15%; RS-485 |
| Slide rail | JUXIANG | JX45 | Length 1000 mm, width 1000 mm |
| Square acrylic plate | SENTAI | PMMA | Length 800 mm, width 800 mm, thickness 10 mm |
| Square Magnetic Block | RUITONG | N35 | Length 100 mm, width 50 mm, thickness 20 mm |
| STM32 | ZHENGDIANYUANZI | F103 | STM32F103ZET6, 72 MHz clock |
| Transistor | Semtech | C118536 | 2N2222A, NPN |

References

- Gregr, E. J. et al. Cascading social-ecological costs and benefits triggered by a recovering keystone predator. Science. 368 (6496), 1243-1247 (2020).

- Brown, J. S., Vincent, T. L. Organization of predator-prey communities as an evolutionary game. Evolution. 46 (5), 1269-1283 (1992).

- Zhao, Z.-D. et al. Zona incerta GABAergic neurons integrate prey-related sensory signals and induce an appetitive drive to promote hunting. Nat Neurosci. 22 (6), 921-932 (2019).

- Hoy, J. L., Yavorska, I., Wehr, M., Niell, C. M. Vision drives accurate approach behavior during prey capture in laboratory mice. Curr Biol. 26 (22), 3046-3052 (2016).

- Holmgren, C. D. et al. Visual pursuit behavior in mice maintains the pursued prey on the retinal region with least optic flow. eLife. 10, e70838 (2021).

- Johnson, K. P. et al. Cell-type-specific binocular vision guides predation in mice. Neuron. 109 (9), 1527-1539.e4 (2021).

- Nahin, P. J. Chases and Escapes: The Mathematics of Pursuit and Evasion. Princeton University Press (2007).

- McGhee, K. E., Pintor, L. M., Bell, A. M. Reciprocal behavioral plasticity and behavioral types during predator-prey interactions. Am Nat. 182 (6), 704-717 (2013).

- Belyaev, R. I. et al. Running, jumping, hunting, and scavenging: Functional analysis of vertebral mobility and backbone properties in carnivorans. J Anat. 244 (2), 205-231 (2024).

- Weihs, D., Webb, P. W. Optimal avoidance and evasion tactics in predator-prey interactions. J Theor Biol. 106 (2), 189-206 (1984).

- Peterson, A. N., Soto, A. P., McHenry, M. J. Pursuit and evasion strategies in the predator-prey interactions of fishes. Integr Comp Biol. 61 (2), 668-680 (2021).

- Galvin, L., Mirza Agha, B., Saleh, M., Mohajerani, M. H., Whishaw, I. Q. Learning to cricket hunt by the laboratory mouse (Mus musculus): Skilled movements of the hands and mouth in cricket capture and consumption. Behav Brain Res. 412, 113404 (2021).

- Timberlake, W., Washburne, D. L. Feeding ecology and laboratory predatory behavior toward live and artificial moving prey in seven rodent species. Anim Learn Behav. 17 (1), 2-11 (1989).

- Sunami, N. et al. Automated escape system: Identifying prey's kinematic and behavioral features critical for predator evasion. J Exp Biol. 227 (10), jeb246772 (2024).

- Szopa-Comley, A. W., Ioannou, C. C. Responsive robotic prey reveal how predators adapt to predictability in escape tactics. Proc Natl Acad Sci U S A. 119 (23), e2117858119 (2022).

- Krause, J., Winfield, A. F. T., Deneubourg, J.-L. Interactive robots in experimental biology. Trends Ecol Evol. 26 (7), 369-375 (2011).

- Swain, D. T., Couzin, I. D., Leonard, N. E. Real-time feedback-controlled robotic fish for behavioral experiments with fish schools. Proceedings of the IEEE. 100 (1), 150-163 (2012).

- Eyal, R., Shein-Idelson, M. PreyTouch: An automated system for prey capture experiments using a touch screen. bioRxiv. 2024.06.16.599188 (2024).

- Ioannou, C. C., Rocque, F., Herbert-Read, J. E., Duffield, C., Firth, J. A. Predators attacking virtual prey reveal the costs and benefits of leadership. Proc Natl Acad Sci U S A. 116 (18), 8925-8930 (2019).

- Farhan, A. et al. An OpenCV-based approach for automated cardiac rhythm measurement in zebrafish from video datasets. Biomolecules. 11 (10), 1476 (2021).

- Hein, A. M. et al. An algorithmic approach to natural behavior. Curr Biol. 30 (11), R663-R675 (2020).

- Kiuchi, K., Shidara, H., Iwatani, Y., Ogawa, H. Motor state changes escape behavior of crickets. iScience. 26 (8), 107345 (2023).

- Wilson-Aggarwal, J. K., Troscianko, J. T., Stevens, M., Spottiswoode, C. N. Escape distance in ground-nesting birds differs with individual level of camouflage. Am Nat. 188 (2), 231-239 (2016).

- Mathis, A. et al. DeepLabCut: Markerless pose estimation of user-defined body parts with deep learning. Nat Neurosci. 21 (9), 1281-1289 (2018).

- Kane, G. A., Lopes, G., Saunders, J. L., Mathis, A., Mathis, M. W. Real-time, low-latency closed-loop feedback using markerless posture tracking. eLife. 9, e61909 (2020).


Copyright © 2025 MyJoVE Corporation. All rights reserved