Method Article

Brain-Computer Interface-controlled Upper Limb Robotic System for Enhancing Daily Activities in Stroke Patients


Summary

This study introduces a brain-computer interface (BCI) system for stroke patients that combines electroencephalography and electrooculography signals to control an upper limb robotic hand, assisting with daily activities. The system was evaluated using the Berlin Bimanual Test for Stroke (BeBiTS).

Abstract

This study introduces a brain-computer interface (BCI)-controlled upper limb assistive robot for post-stroke rehabilitation. The system uses electroencephalogram (EEG) and electrooculogram (EOG) signals to control a robotic hand that assists upper limb function during everyday tasks. This study aimed to determine the BCI-robot system's ability to assist patients in performing activities of daily living. We evaluated the system using the Berlin Bimanual Test for Stroke (BeBiTS), a set of 10 daily living tasks involving both hands. Eight stroke patients participated, but only four could adapt to the BCI-robot system training and perform the postBeBiTS. Notably, the BCI-robot system showed greater assistive effectiveness in the postBeBiTS assessment on items for which the preBeBiTS score was 4 or less. However, the current robotic hand does not assist arm and wrist functions, which limits its use in tasks that require complex movements. More participants are required to confirm the training effectiveness of the BCI-robot system, and future research should consider robots that assist a broader range of upper limb functions.

Introduction

Impairment of upper extremity function due to stroke limits the ability to perform daily activities, especially bimanual tasks1. Hand rehabilitation is therefore a key component of stroke rehabilitation, with mirror therapy2 and constraint-induced movement therapy (CIMT)3 being well-known approaches. Recent research indicates that EEG-based brain-computer interface (BCI) robot systems can be an effective assistive therapy for improving hand function recovery in stroke patients4,5,6. BCI robotic systems couple the patient's active intention to attempt a movement with the execution of that movement. Whether this approach is effective for rehabilitation is the subject of active research7,8,9,10,11,12,13.

In this study, we present a BCI-controlled upper limb assistive robotic system designed to help stroke patients perform bimanual activities. The system uses electroencephalograms (EEG) to detect and interpret brain signals associated with motor imagery and combines them with electrooculograms (EOG) as additional control inputs. These neurophysiological signals enable patients to control a robotic hand that assists with finger movements14. This approach aims to bridge the gap between a patient's intention to move and their physical ability, potentially facilitating motor recovery and increasing independence in daily tasks.

To evaluate the efficacy of this BCI robotic system, we used the Berlin Bimanual Test for Stroke (BeBiTS), a comprehensive assessment tool developed by researchers at the Charité Medical University in Berlin15. The BeBiTS provides a quantitative measure of functional performance by assessing the ability to perform ten bimanual activities essential to daily living. Each task is scored individually, and five components of hand function are evaluated: reaching, grasping, stabilizing, manipulating, and lifting. The BeBiTS therefore enables a comprehensive evaluation of patients' functional improvements in activities of daily living and allows us to quantify the contribution of the BCI robot system to specific hand functions. This study aims to develop an effective BCI assistive robot system and to evaluate it by comparing BeBiTS scores before and after training sessions in stroke patients.

Protocol

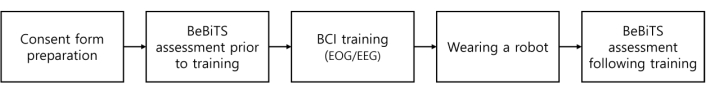

The Seoul National University Bundang Hospital Institutional Review Board reviewed and approved all experimental procedures (IRB No. B-2205-756-003). We recruited eight stroke patients, explained the study procedures in detail, and obtained their informed consent. The protocol then proceeds as follows: a BeBiTS assessment (preBeBiTS) is performed before BCI training, followed by BCI training using EOG and EEG. Afterward, participants wear the robot and perform a second BeBiTS assessment (postBeBiTS) (Figure 1).

1. BCI-robot training system setup

- Patient recruitment

- Perform the screening process using the following inclusion criteria.

- Select patients aged 20 to 68 with impaired upper limb functions.

- Select patients who are unable to flex or extend the fingers of the paralyzed hand.

- Select patients with a single subcortical stroke (including both ischemic and hemorrhagic stroke).

- Select patients who are more than 6 months past the brain injury.

- Select patients with a Fugl-Meyer score of less than 31 for the impaired upper limb.

- Provide all recruited patients with detailed information about the experimental procedure and obtain signed informed consent.

NOTE: If a patient meets the criteria but cannot sign the consent form, a legal representative must provide signed informed consent.

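
The inclusion criteria above can be expressed as a single screening check. The sketch below is illustrative only: the `ScreeningRecord` fields and the `meets_inclusion_criteria` function are hypothetical names, the age bounds are assumed to be inclusive, and the Fugl-Meyer value is assumed to refer to the impaired upper limb.

```python
from dataclasses import dataclass


@dataclass
class ScreeningRecord:
    """Hypothetical per-patient screening data; field names are illustrative."""
    age_years: int
    upper_limb_impaired: bool
    can_flex_or_extend_fingers: bool   # voluntary finger movement of the paralyzed hand
    single_subcortical_stroke: bool    # ischemic or hemorrhagic
    months_since_stroke: float
    fugl_meyer_score: float            # assumed: score of the impaired upper limb


def meets_inclusion_criteria(r: ScreeningRecord) -> bool:
    """Return True only if every inclusion criterion in the protocol is met."""
    return (
        20 <= r.age_years <= 68                # assumed inclusive bounds
        and r.upper_limb_impaired
        and not r.can_flex_or_extend_fingers
        and r.single_subcortical_stroke
        and r.months_since_stroke > 6
        and r.fugl_meyer_score < 31
    )


# Usage: a hypothetical record that satisfies all criteria
example = ScreeningRecord(
    age_years=55, upper_limb_impaired=True, can_flex_or_extend_fingers=False,
    single_subcortical_stroke=True, months_since_stroke=12, fugl_meyer_score=20,
)
print(meets_inclusion_criteria(example))  # True
```

Consent handling is deliberately left out of the check, since it is a procedural step rather than an eligibility criterion.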

- BCI system: EEG device

- Use an EEG viewer system (see the Table of Materials) for data recording.

- Use a personal computer (PC) with custom BCI software and connect the PC to the EEG device.

- Upper limb assistive robotic hand

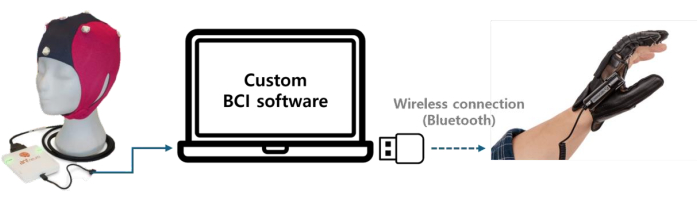

- Fit the assistive robotic hand16, which supports three fingers, on the patient's impaired hand. The robot is a soft, leather-like glove that covers only three fingers: the thumb, index, and middle fingers. Connect the robot wirelessly to the computer using a USB dongle.

- When the robot is connected to the computer via the dongle, its initial state is neutral: the hand is fully open. The BCI system is configured so that the robot closes the hand only when it recognizes the intention to make a fist, that is, when the measured EEG value meets the calibrated threshold. Figure 2 shows the schematic of this BCI-robot system.

2. BCI-robot assessment

- Berlin Bimanual Test for Stroke (BeBiTS)

NOTE: The BeBiTS assessment is a tool for evaluating the performance of 10 bimanual activities of daily living in stroke patients.

- Seat the patient comfortably in an armchair facing a desk. Position the patient close to the desk, approximately 30 cm from the objects used in the assessment, so that the tasks can be performed with both arms and hands.

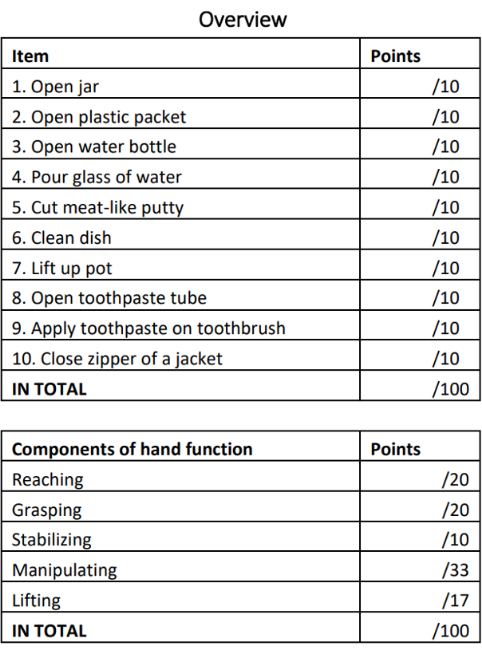

- Prepare the following 10 items for the assessment of bimanual activities: open a jar; open a plastic packet; open a water bottle; pour a glass of water; cut meat-like putty; clean a dish; lift a pot; open a toothpaste tube; apply toothpaste on a toothbrush; close the zipper of a jacket.

NOTE: All 10 tasks are performed while seated at the desk. Each task is scored out of 10, for a total of 100 points.

- Score the five hand function components alongside the individual item scores. The maximum scores are reaching (20), grasping (20), stabilizing (10), manipulating (33), and lifting (17), for a total of 100 points (Figure 3).
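
The arithmetic of the score sheet can be checked programmatically. This is a minimal sketch with hypothetical names (`total_item_score`, `component_percentages`); it does not reproduce how the BeBiTS maps items onto components, so component scores are taken as already rated, and items that a participant could not perform are assumed to be simply left out, as described for the postBeBiTS.

```python
from typing import Dict

ITEM_MAX = 10
BEBITS_ITEMS = (
    "open a jar", "open a plastic packet", "open a water bottle",
    "pour a glass of water", "cut meat-like putty", "clean a dish",
    "lift a pot", "open a toothpaste tube",
    "apply toothpaste on a toothbrush", "close the zipper of a jacket",
)
COMPONENT_MAX = {"reaching": 20, "grasping": 20, "stabilizing": 10,
                 "manipulating": 33, "lifting": 17}

# Both views of the score sheet add up to 100 points.
assert len(BEBITS_ITEMS) * ITEM_MAX == 100
assert sum(COMPONENT_MAX.values()) == 100


def total_item_score(item_scores: Dict[str, float]) -> float:
    """Sum the scored items (0-10 each); items that could not be performed are omitted."""
    for item, score in item_scores.items():
        if item not in BEBITS_ITEMS:
            raise ValueError(f"unknown BeBiTS item: {item!r}")
        if not 0 <= score <= ITEM_MAX:
            raise ValueError(f"{item!r} score {score} outside 0-{ITEM_MAX}")
    return float(sum(item_scores.values()))


def component_percentages(component_scores: Dict[str, float]) -> Dict[str, float]:
    """Express each hand function component score as a percentage of its maximum."""
    return {name: 100.0 * score / COMPONENT_MAX[name]
            for name, score in component_scores.items()}
```

Percentages make the components comparable despite their unequal maxima (for example, 10 of 20 reaching points and 5 of 10 stabilizing points are both 50%).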

3. BCI-robot training system

- EEG setup

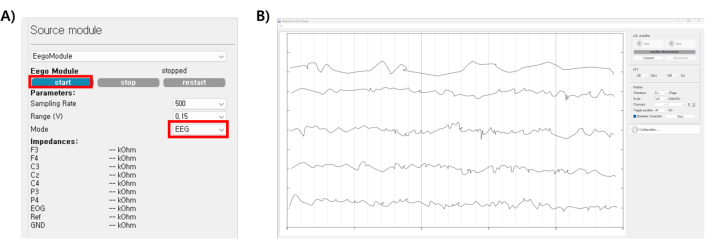

- Open the BCI system. Figure 4 shows the entire screen when the BCI system is opened.

- Place the EEG cap on the participant's head and connect it to the amplifier.

- In the Source module, select EegoModule | impedance mode and press Start; the Source module shows a blue light (Figure 5A).

- Ensure that all impedances are below 10 kΩ, then press Stop in the Source module.

- Change the mode to EEG to stream the data and press Start. Check the signal with the EEG viewer software (Figure 5B).
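
The impedance criterion can be stated as a simple check over the montage listed in the Table of Materials. The function below is a hypothetical helper, not part of the eego or BCI software; it assumes the impedance readings (in kΩ) have already been read off the software display.

```python
from typing import Dict, List

CHANNELS = ("F3", "F4", "C3", "Cz", "C4", "P3", "P4", "EOG")  # montage from the Table of Materials
IMPEDANCE_LIMIT_KOHM = 10.0


def channels_to_fix(impedances_kohm: Dict[str, float],
                    limit_kohm: float = IMPEDANCE_LIMIT_KOHM) -> List[str]:
    """List channels whose impedance is not below the limit; missing readings count as failures."""
    return [ch for ch in CHANNELS
            if impedances_kohm.get(ch, float("inf")) >= limit_kohm]


# Hypothetical readings in kOhm; the EOG channel was not read
readings = {"F3": 4.2, "F4": 5.1, "C3": 12.7, "Cz": 3.9,
            "C4": 6.0, "P3": 8.8, "P4": 9.9}
print(channels_to_fix(readings))  # ['C3', 'EOG']
```

Treating a missing reading as a failure keeps an unchecked electrode from silently passing.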

- EOG calibration

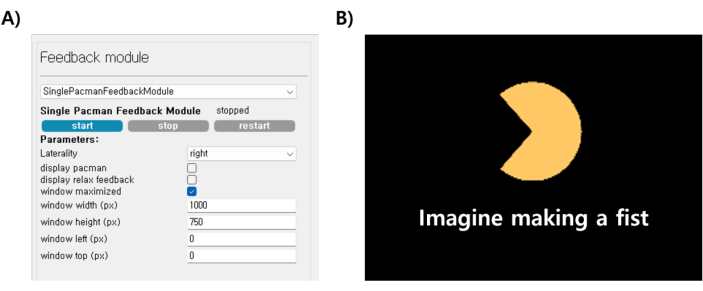

- In the preprocessing module, select the Pipeline specific to the EOG montage (e.g., SMR-EOGleft for EOG electrode on the left eye; Figure 6A).

- In the Task module, set the Number of cues (e.g., 10) and set Directions to the side contralateral to the robotic hand (Figure 6B).

- Instruct the participant to perform brief lateral eye movements in the direction of the 10 arrows that appear (Figure 6C).

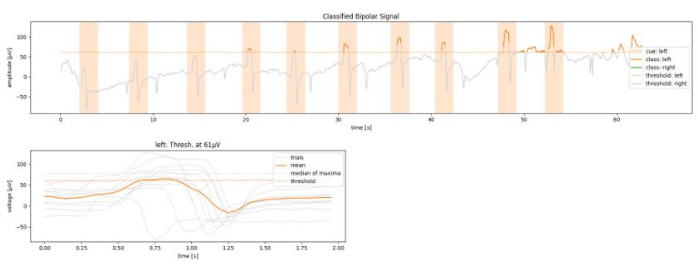

- Check the result graph immediately after training. Each gray line represents one eye movement attempt; confirm that the orange line, which represents their average, reaches the threshold line, indicating that the training was successful (Figure 7).

- If the training is successful, record the threshold parameter value.

NOTE: Consistent repetition of eye movements is essential. Although it varies among participants, EOG calibration is generally successful after about three attempts and usually improves with repeated training.
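
The visual check of Figure 7 can be approximated numerically. This sketch assumes the cued EOG epochs are available as a baseline-corrected array in microvolts and that the threshold is the value reported by the software; the software's own computation is not described here, and `evaluate_eog_calibration` is a hypothetical name.

```python
import numpy as np


def evaluate_eog_calibration(epochs_uv, threshold_uv: float) -> dict:
    """Summarize EOG calibration epochs in the way Figure 7 is read.

    epochs_uv: array (n_trials, n_samples) of baseline-corrected horizontal EOG
    in microvolts, one row per cued eye movement. Assumption: the sign is chosen
    so that the cued direction produces a positive deflection.
    """
    epochs = np.asarray(epochs_uv, dtype=float)
    if epochs.ndim != 2 or epochs.shape[0] == 0:
        raise ValueError("expected a non-empty 2-D array (trials x samples)")
    average = epochs.mean(axis=0)        # the bold average line
    trial_peaks = epochs.max(axis=1)     # peak of each gray single-trial line
    return {
        "average": average,
        "average_reaches_threshold": bool(average.max() >= threshold_uv),
        "fraction_of_trials_crossing": float(np.mean(trial_peaks >= threshold_uv)),
        "peak_spread_uv": float(trial_peaks.std()),  # large spread = inconsistent movements
    }
```

A low crossing fraction or a large peak spread corresponds to the inconsistent trials seen in poorly trained cases, which is why repeated, uniform eye movements matter.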

- EEG calibration

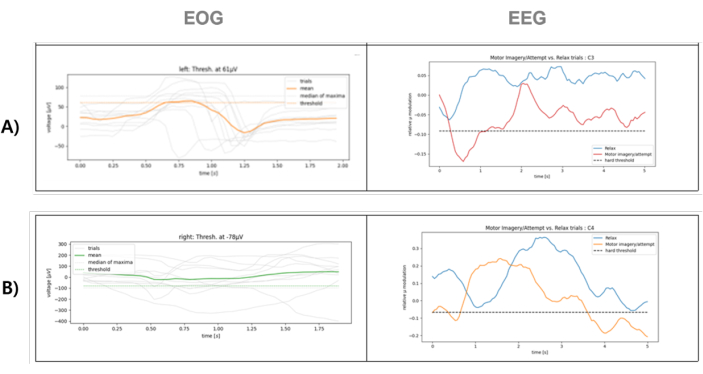

- In the Task module, choose EEGCalibrationTaskModule (Figure 8A). Set the Number of cues in the Task module to 5.

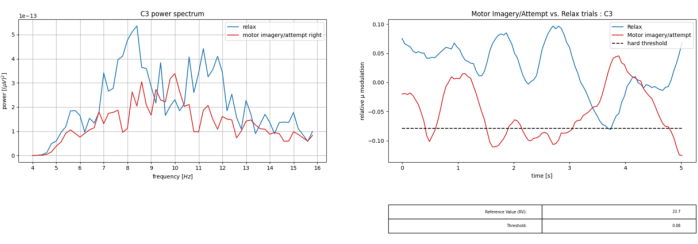

- In the Feedback module, set Laterality to the side of the robotic hand and ensure Display Pac-Man is unselected (Figure 8B).

- Instruct the participant to imagine clenching a fist when the prompt 'Imagine making a fist' appears on the black screen (Figure 8C), and to relax when the screen is blank. Repeat this process, with each fist-clenching imagery period lasting 5 s and each rest period varying randomly between 10 s and 15 s.

- Review the result graph after EEG calibration and use the characteristic event-related desynchronization (ERD) pattern in the 8-12 Hz mu band during motor imagery to determine the participant's appropriate frequency. Record the selected frequency (e.g., 11 Hz in the left graph of Figure 9, which shows the ERD during motor imagery compared with the rest state). In the right graph of Figure 9, confirm that the red line (fist-clenching imagery) is clearly distinct from the blue line (relaxed state) relative to the horizontal dotted threshold line.

- If the EEG calibration is adequate, record the reference value and threshold shown below the right-hand graph.

NOTE: Short calibration sessions may be insufficient for some participants, for example because they do not understand the instructions or because of BCI illiteracy.
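
The frequency selection in the EEG calibration step can be sketched with the standard ERD definition, ERD% = (P_imagery - P_rest) / P_rest × 100, evaluated per frequency within the 8-12 Hz mu band. This is an illustration under stated assumptions, not the BCI software's algorithm: the function names are hypothetical, the imagery and rest segments are assumed to come from one channel over the sensorimotor cortex (e.g., C3 or C4) and to have been cropped to equal length (for example, 5 s each), and the rest-state power at the selected frequency is used as a stand-in for the reference value.

```python
import numpy as np


def mean_power_spectrum(segments, fs: float):
    """Trial-averaged power spectrum of equal-length segments (trials x samples)."""
    x = np.atleast_2d(np.asarray(segments, dtype=float))
    x = x - x.mean(axis=-1, keepdims=True)       # remove DC offset per trial
    window = np.hanning(x.shape[-1])              # Hann taper to limit spectral leakage
    power = np.abs(np.fft.rfft(x * window, axis=-1)) ** 2
    freqs = np.fft.rfftfreq(x.shape[-1], d=1.0 / fs)
    return freqs, power.mean(axis=0)


def select_mu_frequency(imagery, rest, fs: float, band=(8.0, 12.0)):
    """Pick the frequency in `band` with the strongest (most negative) ERD.

    Returns (frequency_hz, erd_percent, rest_power); rest_power is a
    hypothetical stand-in for the software's reference value.
    """
    imagery = np.atleast_2d(np.asarray(imagery, dtype=float))
    rest = np.atleast_2d(np.asarray(rest, dtype=float))
    if imagery.shape[-1] != rest.shape[-1]:
        raise ValueError("crop imagery and rest segments to the same length")
    freqs, p_img = mean_power_spectrum(imagery, fs)
    _, p_rest = mean_power_spectrum(rest, fs)
    erd = (p_img - p_rest) / p_rest * 100.0
    in_band = np.flatnonzero((freqs >= band[0]) & (freqs <= band[1]))
    best = in_band[np.argmin(erd[in_band])]
    return float(freqs[best]), float(erd[best]), float(p_rest[best])
```

With 5 s segments the frequency resolution is 0.2 Hz, which is finer than needed to report a single value such as 11 Hz.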

- Training with feedback

- After completing EOG and EEG calibration, enter the target frequency, reference value, and threshold identified from the result graphs in the previous steps; the system uses these parameters to detect the intention to make a fist.

- Using the configured parameters, proceed with feedback training using Pac-Man. If the parameters are set correctly, Pac-Man's mouth gradually closes when the participant imagines clenching their fist. If Pac-Man's mouth does not close properly, repeat the EEG calibration while adjusting the reference value and threshold (Figure 10).
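
During feedback training, the calibrated reference value and threshold determine how far Pac-Man's mouth closes. One plausible mapping is sketched below; it is an assumption, not the documented behavior of the software. It expresses the current mu power as ERD relative to the reference value, treats the threshold as a negative ERD percentage, and smooths the output so that the mouth closes gradually. All names are hypothetical.

```python
def erd_percent(power: float, reference: float) -> float:
    """ERD relative to the calibrated reference power (negative = desynchronization)."""
    if reference <= 0:
        raise ValueError("reference power must be positive")
    return (power - reference) / reference * 100.0


def mouth_openness(erd: float, threshold_erd: float) -> float:
    """Map ERD to mouth opening: 1.0 fully open (no ERD), 0.0 closed (ERD at or beyond threshold)."""
    if threshold_erd >= 0:
        raise ValueError("threshold must be a negative ERD percentage")
    return min(1.0, max(0.0, 1.0 - erd / threshold_erd))


def smooth(values, alpha: float = 0.3):
    """Exponential smoothing so the mouth closes gradually instead of flickering."""
    out, state = [], None
    for v in values:
        state = v if state is None else alpha * v + (1.0 - alpha) * state
        out.append(state)
    return out
```

For example, with a threshold of -50%, a 25% drop in mu power below the reference leaves the mouth half open, and a drop of 50% or more closes it fully.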

- Experiment: BeBiTS assessment using the BCI robot system

- After completing all feedback training (approximately 30 minutes), have the participant wear the robot and conduct the postBeBiTS assessment using the trained BCI system.

NOTE: If the patient's hand spasticity is severe, take special care when fitting the robot. The three-finger assistance provided by the robot may also be insufficient for some patients to perform all the movements required in the BeBiTS assessment. In such cases, evaluate only the movements that can be performed.

- Wait for the white light, which indicates the ready state, to appear on the screen (Figure 11A). Once the white light appears, the participant moves their eyes to one side to change the light to green (Figure 11B). When the green light appears, the participant imagines clenching their fist.

NOTE: If the system correctly recognizes the participant's intention, it activates the robot to close the participant's hand into a fist.

- Have the participant clench their fist with the help of the robot and then perform the action (Figure 11C).

- A red light indicates that the robot is holding the hand in a clenched position; the participant sees this red light on the screen after completing the action (Figure 11D). To open the hand again with the help of the robot, the participant moves their eyes to change the light back to white.

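
The light sequence in Figure 11 behaves like a small state machine. The sketch below encodes it under the assumption that eye movements in the green state and motor imagery in the white or red states are ignored; the `Light` enum, the `step` function, and the "close"/"open" command strings are hypothetical and do not represent the robot's actual interface.

```python
from enum import Enum
from typing import Optional, Tuple


class Light(Enum):
    WHITE = "ready: hand open"
    GREEN = "armed: waiting for motor imagery"
    RED = "hand held closed by the robot"


def step(light: Light, eye_movement: bool, fist_imagery: bool) -> Tuple[Light, Optional[str]]:
    """Advance one decision step; return the new light and a robot command (or None)."""
    if light is Light.WHITE and eye_movement:
        return Light.GREEN, None          # EOG event arms the system
    if light is Light.GREEN and fist_imagery:
        return Light.RED, "close"         # detected motor imagery closes the glove
    if light is Light.RED and eye_movement:
        return Light.WHITE, "open"        # EOG event releases the grip
    return light, None                    # all other inputs are ignored


# Usage: one full grasp-and-release cycle
light = Light.WHITE
for eog, mi in [(True, False), (False, True), (False, False), (True, False)]:
    light, command = step(light, eog, mi)
    print(light.name, command)
# GREEN None
# RED close
# RED None
# WHITE open
```

Gating motor imagery behind the green state means the hand can only close after a deliberate eye movement, which limits unintended grasps from spontaneous mu-band fluctuations.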

Results

Figure 12 shows the results of EOG and EEG training. Figure 12A represents the results of a well-trained participant. The EOG training values are consistent, with the average (orange bold line) properly reaching the threshold line. The EEG training results also clearly distinguish between the blue (resting state) and the red (motor imagery) lines.

In contrast, Figure 12B shows the results of a participant who did not train well. The EOG trials are inconsistent, and the average (green bold line) does not reach the threshold line. Moreover, the EEG training results do not clearly distinguish between the resting state and motor imagery.

Table 1 presents the BeBiTS assessment scores for all eight participants, obtained before (pre) and after (post) BCI system training. Participants P1, P4, and P5 failed to score on almost all items in both BeBiTS assessments. Participant P3 scored in the preBeBiTS assessment but, owing to inadequate training with the BCI robot system, failed to score in the postBeBiTS assessment using the system. The remaining participants (P2 and P6-P8) scored on some of the performable items in the postBeBiTS assessment.

Figure 1: Flowchart of the entire protocol.

Figure 2: Schematic of the BCI-robot system. Abbreviation: BCI = brain-computer interface.

Figure 3: BeBiTS assessment score sheet. The score is based on ten daily living performance items and five hand function components: reaching, grasping, stabilizing, manipulating, and lifting. The total score is 100 points. Abbreviation: BeBiTS = Berlin Bimanual Test for Stroke.

Figure 4: Full-screen view of the BCI program. Abbreviation: BCI = brain-computer interface.

Figure 5: EEG impedance check in the BCI program. Abbreviations: EEG = electroencephalography; BCI = brain-computer interface.

Figure 6: EOG calibration process and EOG training screen. (A) Preprocessing module, (B) EOG calibration task module, (C) The screen viewed by the patient during EOG training. The patient is instructed to move their eyes in the direction indicated by the arrows. Abbreviation: EOG = electrooculography.

Figure 7: Graph of results after EOG calibration, used to verify the participant's trained EOG parameter values. Abbreviation: EOG = electrooculography.

Figure 8: EEG training steps and instruction screen for discriminating motor intention when imagining making a fist. (A) EEG calibration task module, (B) Feedback module, (C) The screen viewed by the patient during EEG training. The patient is instructed to imagine clenching a fist as directed on the screen. Abbreviation: EEG = electroencephalography.

Figure 9: Graphs of training results displayed when EEG calibration is complete. Abbreviation: EEG = electroencephalography.

Figure 10: Feedback process and screen view using Pac-Man. (A) Feedback module, (B) The screen viewed by the patient during Pac-Man training. When the patient imagines clenching their fist as instructed on the screen, Pac-Man's mouth closes smoothly if the training is successful.

Figure 11: Schematic diagram of the BCI system process. (A) After the robot is put on, a white light appears on the screen as the initial stage for using the BCI robot system, indicating that the system is ready to be used. (B) Participants change the light to green by moving their eyes. When the green light appears, the participant imagines clenching their fist. (C) Once the fist is clenched with the assistance of the robot, the participant performs the action. (D) The red light signifies that the hand is held in a clenched position by the robot. To open the hand again, participants move their eyes to return to the ready state. Abbreviation: BCI = brain-computer interface.

Figure 12: Results of EOG and EEG training for using the BCI system. (A) Well-trained case, (B) poorly trained case. Abbreviations: EOG = electrooculography; EEG = electroencephalography; BCI = brain-computer interface.

Table 1: Scores for all participants on the ten BeBiTS items pre- and post-BCI training. Abbreviations: BeBiTS = Berlin Bimanual Test for Stroke; BCI = brain-computer interface.

Discussion

This research presented a BCI upper limb assistive robotic system to support stroke patients in executing daily tasks. We assessed bimanual task performance with the BeBiTS15 and trained participants to operate the upper limb assistive robot via the BCI system14. In contrast to conventional rehabilitation procedures, this approach allows patients to engage actively in their recovery by controlling the robot according to their intentions. Accurate EOG and EEG calibration is crucial for obtaining reliable control signals from the BCI system. It is also essential to ensure that the robot fits the user's hand comfortably.

With only eight participants, the sample size was limited, which constrains our ability to evaluate the effectiveness of the BCI robot training system definitively. Nevertheless, several notable characteristics emerged from the participants' BCI system training results. First, participants often found the EOG training relatively easy but struggled with the EEG training, which required differentiating between motor imagery and rest. Only four of the eight participants could adapt to the BCI robotic system training and perform the postBeBiTS assessment. Moreover, when using the robot, these participants completed only a few BeBiTS items. All four consistently completed items 6 and 7, whereas their performance on the remaining items varied with their hand function. The main reason was that the robotic hand used in this study assisted only three fingers, limiting its effectiveness in tasks that require stability or movement of the arm and wrist.

In particular, on items for which the preBeBiTS score was 4 or lower, the BCI robotic system showed a positive effect in the postBeBiTS evaluation. This observation suggests the patient conditions under which the robot may be most helpful, but additional research is needed to verify it.
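
The per-item comparison underlying this observation can be tabulated with a short helper. This is a hypothetical sketch rather than the analysis used in the study: it takes a participant's pre- and postBeBiTS item scores (e.g., from Table 1), keeps items with a preBeBiTS score of 4 or less, and skips items that were not scored in the postBeBiTS.

```python
from typing import Dict


def changes_on_low_baseline_items(pre: Dict[str, float], post: Dict[str, float],
                                  cutoff: float = 4) -> Dict[str, float]:
    """Post-minus-pre change for items whose preBeBiTS score is <= cutoff.

    Items without a postBeBiTS score (not performable with the robot) are skipped.
    """
    return {item: post[item] - pre[item]
            for item, pre_score in pre.items()
            if pre_score <= cutoff and item in post}
```

Skipping unscored items, rather than treating them as zero, keeps items that the three-finger robot could not support from being counted as deteriorations.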

To optimize the implementation of BCI systems, it is crucial to reduce training time and minimize variability among users. A robot that assists all five fingers and supports arm strength could improve the effectiveness of BCI training and yield better results in future BeBiTS assessments. Larger-scale studies are also essential for advancing stroke rehabilitation outcomes. Lastly, integrating additional signals such as electromyography (EMG) or developing home-based BCI robot systems that users can operate independently are promising directions for stroke rehabilitation.

Disclosures

The authors have no conflicts of interest to declare.

Acknowledgements

This work was supported by the German-Korean Academia-Industry International Collaboration Program on Robotics and Lightweight Construction/Carbon, funded by the Federal Ministry of Education and Research of the Federal Republic of Germany and the Korean Ministry of Science and ICT (Grant No. P0017226).

Materials

| Name | Company | Catalog Number | Comments |
|---|---|---|---|
| BCI2000 | Open source | | General-purpose software system for brain-computer interface (BCI) research; free for non-commercial use |
| BrainVision LSL Viewer | Brain Products GmbH | | Tool for monitoring LSL EEG and marker streams |
| eego mini amplifier with 8-channel (F3, F4, C3, Cz, C4, P3, P4, EOG) waveguard original caps | ANT Neuro, Netherlands | | Compact, lightweight, and portable amplifier suitable for EEG recording in various environments |
| Neomano | Neofect, Korea | | Glove material: leather, Velcro, non-slip cloth; wire material: synthetic thread; weight: 65 g (without battery); covers three fingers: the thumb, index, and middle fingers |
| Personal computer (PC) with custom BCI software | | | Windows laptop |

References

- Ekstrand, E., Rylander, L., Lexell, J., Brogårdh, C. Perceived ability to perform daily hand activities after stroke and associated factors: a cross-sectional study. BMC Neurol. 16, 208 (2016).

- Angerhöfer, C., Colucci, A., Vermehren, M., Hömberg, V., Soekadar, S. R. Post-stroke rehabilitation of severe upper limb paresis in Germany-toward long-term treatment with brain-computer interfaces. Front. Neurol. 12, 772199 (2021).

- Corbetta, D., Sirtori, V., Castellini, G., Moja, L., Gatti, R. Constraint-induced movement therapy for upper extremities in people with stroke. Cochrane Database Syst Rev. 2015 (10), CD004433 (2015).

- Kutner, N. G., Zhang, R., Butler, A. J., Wolf, S. L., Alberts, J. L. Quality-of-life change associated with robotic-assisted therapy to improve hand motor function in patients with subacute stroke: a randomized clinical trial. Phys Ther. 90 (4), 493-504 (2010).

- Baniqued, P. D. E., et al. Brain-computer interface robotics for hand rehabilitation after stroke: a systematic review. J Neuroeng Rehabil. 18 (1), 15 (2021).

- Yang, S., et al. Exploring the use of brain-computer interfaces in stroke neurorehabilitation. BioMed Res Int. 2021, 9967348 (2021).

- Wolpaw, J. R. Brain-computer interfaces. Handb Clin Neurol. 110, 67-74 (2013).

- Teo, W. P., Chew, E. Is motor-imagery brain-computer interface feasible in stroke rehabilitation? PM R. 6 (8), 723-728 (2014).

- Gomez-Rodriguez, M., et al. Towards brain-robot interfaces in stroke rehabilitation. IEEE Int Conf Rehabil Robot. 2011, 5975385 (2011).

- Silvoni, S., et al. Brain-computer interface in stroke: a review of progress. Clin EEG Neurosci. 42 (4), 245-252 (2011).

- Soekadar, S. R., Birbaumer, N., Cohen, L. G. Brain-computer interfaces in the rehabilitation of stroke and neurotrauma. In: Kansaku, K., Cohen, L. G. (eds.) Systems Neuroscience and Rehabilitation. 3-18 (2011).

- Said, R. R., Heyat, M. B. B., Song, K., Tian, C., Wu, Z. A systematic review of virtual reality and robot therapy as recent rehabilitation technologies using EEG-brain-computer interface based on movement-related cortical potentials. Biosensors. 12 (12), 1134 (2022).

- Daly, J. J., Huggins, J. E. Brain-computer interface: current and emerging rehabilitation applications. Arch Phys Med Rehabil. 96 (3 Suppl), S1-S7 (2015).

- Soekadar, S. R., Witkowski, M., Vitiello, N., Birbaumer, N. An EEG/EOG-based hybrid brain-neural computer interaction (BNCI) system to control an exoskeleton for the paralyzed hand. Biomed Tech (Berl). 60 (3), 199-205 (2015).

- Angerhöfer, C., et al. The Berlin Bimanual Test for Tetraplegia (BeBiTT) to assess the impact of assistive hand exoskeletons on bimanual task performance. J NeuroEng Rehabil. 20, 17 (2023).

- Neofect. Wearable Robot Hand Assistance. Available from: https://www.neofect.com/kr/neomano (2024).


Copyright © 2025 MyJoVE Corporation. All rights reserved